Why do we need Pig?

1.Those who don’t know Java can still learn and write Pig scripts.

2.About 10 lines of Pig can do the work of roughly 200 lines of Java.

3.It has built-in operations such as Join, Group, Filter, Sort and more.

Why use Pig when we already have MapReduce?

1.Its performance is on par with raw Hadoop.

2.About 20 lines of Hadoop (Java MapReduce) code can be expressed in 1 line of Pig.

3.About 16 minutes of Hadoop development time corresponds to 1 minute with Pig.

Map-Reduce

1.Powerful model for parallelism

2.Based on a rigid procedural structure

3.Provides a good opportunity to parallelize algorithms

Pig

1.It is desirable to have a higher level declarative language.

2.Similar to a SQL query, where the user specifies the “what” and leaves the “how” to the underlying processing engine.

Why Pig

1.Java Not Required

2.Can handle structured or semi-structured data.

3.Easy to learn, write and read, because it is similar to SQL and reads like a series of steps.

4.It can be extended with UDFs written in Java, Python, Ruby and JavaScript.

5.It provides common data operations (filters, joins, ordering, etc.) and nested data types (tuples, bags and maps), which are missing in MapReduce.

6.An ad-hoc way of creating and executing map-reduce jobs on very large data sets

7.Open source and actively supported by a community of developers.

Where should we use Pig?

1.Pig is a data flow language.

2.It sits on top of Hadoop and makes it possible to create complex jobs that process large volumes of data quickly and efficiently.

3.Time-sensitive data loads

4.Processing many data sources

5.Analytic insight through sampling

Where not to use Pig?

1.Really nasty data formats or completely unstructured data (video, audio, raw human-readable text)

2.Perfectly implemented MapReduce code can sometimes execute jobs slightly faster than equally well written Pig code.

3.When we would like more power to optimize our code.

What is Pig?

1.Pig is an open-source, high-level data flow system.

2.It provides a simple language for queries and data manipulation, Pig Latin, which is compiled into MapReduce jobs that run on Hadoop.

Why Is it Important?

1.Companies like Yahoo, Google and Microsoft are collecting enormous data sets in the form of click streams, search logs and web crawls.

2.Some form of ad-hoc processing and analysis of all this information is required.

Where will we use Pig?

1.Processing of Web Logs

2.Data processing for search platforms

3.Support for Ad hoc queries across large datasets.

4.Quick Prototyping of algorithms for processing large data sets.

Conceptual Data flow for Analysis task

How does Yahoo use Pig?

1.Pig is best suited for the data factory.

The data factory contains

Pipelines:

1.Pipelines bring in logs from Yahoo’s web servers.

2.These logs undergo a cleaning step in which bots, company-internal views and clicks are removed.

Research:

1.Researchers want to quickly write a script to test a theory.

2.Pig integration with streaming makes it easy for researchers to take a Perl or Python script and run it against a huge dataset.

Use case in healthcare

1.Take a DB dump in CSV format and ingest it into HDFS.

2.Read the CSV file from HDFS using a Pig script.

3.De-identify columns based on configuration and write the data back to a CSV file using a Pig script.

4.Store the de-identified CSV file in HDFS.

Pig – Basic Program structure.

Script:

1.Pig can run a script file that contains Pig commands

Ex: pig script.pig runs the commands in the file script.pig.

Grunt:

1.Grunt is an interactive shell for running the Pig commands.

2.It is also possible to run Pig scripts from within Grunt using the run and exec commands.

Embedded:

1.We can run Pig programs from Java, much like we use JDBC to run SQL programs from Java.

Pig Running modes:

1.Local mode -> pig -x local

2.MapReduce (HDFS) mode -> pig

Pig is made up of Two components

1.Language

a.Pig Latin is used to express data flows

2.Execution Environments

a.Distributed execution on a Hadoop Cluster

b.Local execution in a single JVM

Pig Execution

1.Pig resides on the user's machine.

2.Jobs execute on the Hadoop cluster.

3.We do not need to install anything extra on the Hadoop cluster.

Pig Latin Program

1.It is made up of a series of operations or transformations that are applied to the input data to produce output.

2.Pig turns the transformations into a series of MapReduce Jobs.

Basic Types of Data Models in Pig

1.Atom

2.Tuple

3.Bag

4.Map

a)Bag is a collection of tuples

b)Tuple is a collection of fields

c)A field is a piece of data

d)A map is a mapping from keys, which are string literals, to values that can be of any data type.

Example: t = (1,{(2,3),(4,6),(5,7)},['apache'#'search'])
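The nesting in the example tuple can be easier to see with ordinary containers. The following is a minimal sketch in plain Python, not Pig's API: it assumes a Python tuple stands in for a Pig tuple, a list of tuples for a bag (a real bag is unordered) and a dict for a map. The variable names are illustrative only.

```python
# Plain-Python stand-ins for Pig's data model (illustrative, not Pig's API).
# Atom -> scalar, Tuple -> tuple, Bag -> list of tuples, Map -> dict with string keys.
atom = 1
bag = [(2, 3), (4, 6), (5, 7)]      # a bag is a collection of tuples
data_map = {"apache": "search"}     # keys are strings, values can be any type

# The example tuple t from the text: three fields of different types.
t = (atom, bag, data_map)

first_field = t[0]                  # comparable to $0 in Pig
second_tuple_in_bag = t[1][1]       # one tuple taken from the bag
looked_up = t[2]["apache"]          # comparable to a map lookup by key

print(first_field, second_tuple_in_bag, looked_up)
```

The point of the sketch is that a single field of a tuple can itself hold a whole bag or map, which is what "nested data types" refers to.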

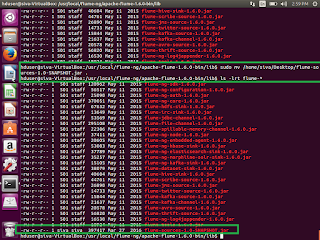

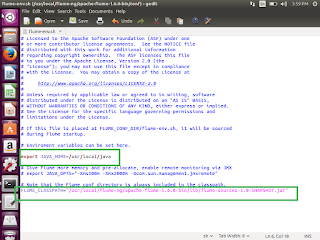

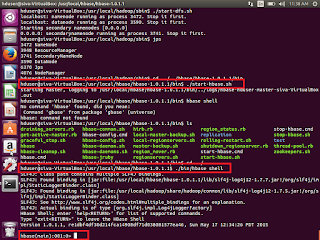

How to install and start Pig

1.Download Pig from the Apache site.

2.Untar it and place it wherever you want.

3.To start Pig, type pig in the terminal; this gives you the Grunt shell.

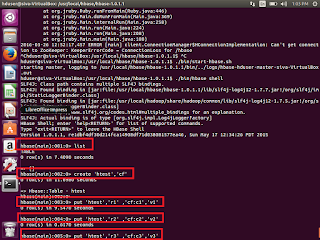

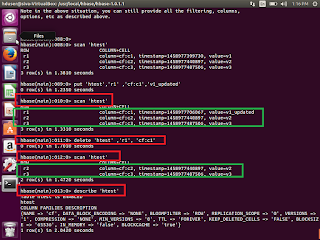

Demo:

1.Create the directory pig_demo under /home/usr/demo/: mkdir pig_demo

2.Go to /home/usr/demo/pig_demo

3.Create two text files, A.txt and B.txt

4.gedit A.txt

Add data like the following to the A.txt file.

0,1,2

1,7,8

5.gedit B.txt

Add data like the following to the B.txt file.

0,5,2

1,7,8

6.Now we need to move these two files into HDFS.

7.Create a directory in HDFS

hadoop fs -mkdir /user/pig_demo

8.Copy the A.txt and B.txt files into HDFS

hadoop fs -put *.txt /user/pig_demo/

9.Start Pig

pig -x local (local mode; paths then refer to the local file system, not HDFS)

or

pig

10.We get the Grunt shell. Using the Grunt shell, we load the data and run operations on it.

grunt> a = LOAD '/user/pig_demo/A.txt' USING PigStorage(',');

grunt> b = LOAD '/user/pig_demo/B.txt' USING PigStorage(',');

grunt> dump a;

-- the data is loaded and displayed in Pig

grunt> dump b;

grunt> c = UNION a, b;

grunt> dump c;

11.We can change the data in A.txt and place it into HDFS again using hadoop fs -put A.txt /user/pig_demo/ (if the file already exists in HDFS, remove the old copy first, because -put does not overwrite by default).

Then load the data again in the Grunt shell, combine the two relations a and b using UNION, and dump c. This is how we can reload changed data right away.

-- To split the data on the value of the first column ($0) into d and e

grunt> SPLIT c INTO d IF $0 == 0, e IF $0 == 1;

grunt> dump d;

grunt> dump e;

grunt> lmt = LIMIT c 3;

grunt> dump lmt;

grunt> dump c;

grunt> f= FILTER c BY $1 > 3;

grunt> dump f;

grunt> g = group c by $2;

grunt> dump g;
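To make the effect of these operators concrete, here is a rough plain-Python model of the same steps on the sample A.txt and B.txt rows. It is only a sketch of the semantics, not how Pig executes them: lists stand in for relations, the helper name group_by is made up for illustration, and the row order shown is an assumption, since Pig does not guarantee the order of UNION or which tuples LIMIT returns.

```python
from collections import defaultdict

a = [(0, 1, 2), (1, 7, 8)]   # rows of A.txt
b = [(0, 5, 2), (1, 7, 8)]   # rows of B.txt

c = a + b                                    # UNION a, b (duplicates are kept)
d = [row for row in c if row[0] == 0]        # SPLIT c INTO d IF $0 == 0
e = [row for row in c if row[0] == 1]        #              e IF $0 == 1
lmt = c[:3]                                  # LIMIT c 3 (any three tuples in Pig)
f = [row for row in c if row[1] > 3]         # FILTER c BY $1 > 3

def group_by(rows, index):
    """Hypothetical helper: collect rows into one bag per key, like GROUP ... BY."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[index]].append(row)
    return dict(groups)

g = group_by(c, 2)                           # GROUP c BY $2

print(d, e, f, g, sep="\n")
```

Note that GROUP produces one record per key whose second field is a bag of all the matching rows, which is why dump g shows nested output.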

We can also load the data with a schema that names and types each field

grunt> a = LOAD '/user/pig_demo/A.txt' USING PigStorage(',') AS (a1:int, a2:int, a3:int);

grunt> b = LOAD '/user/pig_demo/B.txt' USING PigStorage(',') AS (b1:int, b2:int, b3:int);

grunt> c= UNION a,b;

grunt> g = group c by $2;

grunt> f= FILTER c BY $1 > 3;

grunt> describe c;

grunt> h= GROUP c ALL;

grunt> i= FOREACH h GENERATE COUNT($1);

grunt> dump h;

grunt> dump i;

grunt> j = COGROUP a BY $2 , b BY $2;

grunt> dump j;

grunt> j = join a by $2 , b by $2;

grunt> dump j;

Pig Latin Relational Operators

1.Loading and storing

a.LOAD – Loads data from the file system or other storage into a relation

b.STORE – Saves a relation to the file system or other storage

c.DUMP – Prints a relation to the console

2.Filtering

a.FILTER- Removes unwanted rows from a relation

b.DISTINCT – Removes duplicate rows from a relation

c.FOREACH ... GENERATE – Adds or removes fields from a relation.

d.STREAM – Transforms a relation using an external program.

3.Grouping and Joining

a.JOIN – Join two or more relations.

b.COGROUP – Groups the data in two or more relations

c.GROUP – Group the data in a single relation

d.CROSS – Creates a Cross product of two or more relations

4.Sorting

a.ORDER – Sorts a relation by one or more fields

b.LIMIT – Limits the size of a relation to a maximum number of tuples.

5.Combining and Splitting

a.UNION – Combines two or more relations into one

b.SPLIT – Splits a relation into two or more relations.

Pig Latin – Nulls

1.In Pig, a NULL data element means the value is unknown.

2.Pig includes the concept of a data element being NULL, and data of any type can be NULL.
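Because NULL means "unknown", a comparison against it is neither true nor false. The sketch below models that in plain Python under two assumptions: None stands in for NULL, and the sample rows and the helper greater_than are made up for illustration. It reflects the usual Pig behaviour in which a FILTER keeps only rows whose condition is true and COUNT skips null values, but treat it as an approximation rather than a specification.

```python
# None stands in for Pig's NULL; the rows are invented sample data.
rows = [(0, 1), (1, None), (2, 7)]

def greater_than(value, threshold):
    """Hypothetical helper: comparing an unknown value gives an unknown result."""
    if value is None:
        return None
    return value > threshold

# Like FILTER rows BY $1 > 3: only rows whose condition is exactly True pass,
# so the row with a null second field is dropped.
filtered = [row for row in rows if greater_than(row[1], 3) is True]

# Like counting the second field: null values are not counted.
count_non_null = sum(1 for row in rows if row[1] is not None)

print(filtered, count_non_null)
```

A row with a null field is therefore silently excluded by such a filter, which is worth remembering when row counts look smaller than expected.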

Pig Latin – File Loaders

1.BinStorage – Loads and stores data in a binary format

2.PigStorage – Loads and stores data delimited by a chosen character (tab by default)

3.TextLoader – Loads data line by line (delimited by newline character)

4.CSVLoader – Loads CSV files.

5.XMLLoader – Loads XML files.

Joins and COGROUP

1.JOIN and COGROUP operators perform similar functions

2.JOIN creates a flat set of output records while COGROUP creates a nested set of output records.
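The difference between flat and nested output can be sketched in plain Python using the A.txt and B.txt rows from the demo, keyed on the third field ($2). This is a model of the output shape only, under the assumption that lists stand in for relations and bags; the function names join and cogroup are illustrative and are not Pig's implementation.

```python
a = [(0, 1, 2), (1, 7, 8)]   # rows of A.txt
b = [(0, 5, 2), (1, 7, 8)]   # rows of B.txt

def join(left, right, li, ri):
    """Flat output: one combined row per matching pair of rows."""
    return [l + r for l in left for r in right if l[li] == r[ri]]

def cogroup(left, right, li, ri):
    """Nested output: one row per key, holding a bag of rows from each input."""
    keys = sorted({row[li] for row in left} | {row[ri] for row in right})
    return [
        (key,
         [row for row in left if row[li] == key],
         [row for row in right if row[ri] == key])
        for key in keys
    ]

joined = join(a, b, 2, 2)
cogrouped = cogroup(a, b, 2, 2)

print(joined)
print(cogrouped)
```

In this model a key that appears in only one input still gets a row from cogroup, with an empty bag for the other input, whereas join produces nothing for it.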

Diagnostic Operators and UDF Statements

1.Types of Pig Latin Diagnostic Operators

a.DESCRIBE – Prints a relation's schema.

b.EXPLAIN – Prints the logical and physical plans

c.ILLUSTRATE – Shows a sample execution of the logical plan, using a generated subset of the input.

2.Types of Pig Latin UDF Statements

a.REGISTER – Registers a JAR file with the Pig runtime

b.DEFINE – Creates an alias for a UDF, streaming script, or command specification

grunt> describe c;

grunt> explain c;

grunt> illustrate c;

Pig – UDF (User Defined Function)

1.Pig lets users combine existing operators with their own or others' code via UDFs.

2.Pig itself comes with some UDFs, including a large number of standard string-processing, math and complex-type UDFs.

Pig word count example

1.Create the input file: sudo gedit pig_wordcount.txt

The data looks like this, with the same line repeated several times:

hive mapreduce pig Hbase siva raju

hive mapreduce pig Hbase siva raju

hive mapreduce pig Hbase siva raju

Create a directory inside HDFS and place the file in it

hadoop fs -mkdir /user/pig_demo/pig-wordcount_input

hadoop fs -put pig_wordcount.txt /user/pig_demo/pig-wordcount_input/

List the files inside that directory

hadoop fs -ls /user/pig_demo/pig-wordcount_input/

Load the data into Pig and count the words

grunt> A = LOAD '/user/pig_demo/pig-wordcount_input/pig_wordcount.txt';

grunt> dump A;

grunt> B = FOREACH A GENERATE FLATTEN(TOKENIZE((chararray)$0)) AS word;

grunt> dump B;

grunt> C = GROUP B BY word;

grunt> dump C;

grunt> D = FOREACH C GENERATE group, COUNT(B);

grunt> dump D;

How to write a Pig script and execute it

sudo gedit pig_wordcount_script.pig

Add the following commands to the script file.

A = LOAD '/user/pig_demo/pig-wordcount_input/pig_wordcount.txt';

B = FOREACH A GENERATE FLATTEN(TOKENIZE((chararray)$0)) AS word;

C = GROUP B BY word;

D = FOREACH C GENERATE group, COUNT(B);

STORE D INTO '/user/pig_demo/pig-wordcount_input/pig_wordcount_output.txt';

DUMP D;

Once the script is complete, execute it:

pig pig_wordcount_script.pig
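The word count data flow (load lines, tokenize and flatten into words, group by word, count each group) can be mirrored in a few lines of plain Python. This is only a sketch of the logic: the three input lines are a stand-in for pig_wordcount.txt, and splitting on whitespace is a simplification of what TOKENIZE does.

```python
from collections import Counter

# Stand-in for the contents of pig_wordcount.txt (assumed: three identical lines).
lines = ["hive mapreduce pig Hbase siva raju"] * 3

# B: tokenize each line and flatten, giving one word per record.
words = [word for line in lines for word in line.split()]

# C and D: group the records by word, then count the records in each group.
counts = Counter(words)

for word, count in sorted(counts.items()):
    print(word, count)
```

Each step in the Python version corresponds to one relation (B, then C and D) in the Pig script, which is a convenient way to check what each dump is expected to show.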

Create a user-defined function using Java

1.Open Eclipse, create a new project, and add a new class:

import java.io.IOException;
import org.apache.pig.EvalFunc;
import org.apache.pig.data.Tuple;

// Pig UDF that converts its first argument to upper case.
public class ConvertUpperCase extends EvalFunc<String> {
    @Override
    public String exec(Tuple input) throws IOException {
        // Return null for a missing or empty input tuple.
        if (input == null || input.size() == 0) {
            return null;
        }
        try {
            String str = (String) input.get(0);
            return str.toUpperCase();
        } catch (Exception ex) {
            throw new IOException("Caught exception processing row", ex);
        }
    }
}

Once the coding is done, export the class as a jar and place it wherever you want.

How to run the jar from a Pig script

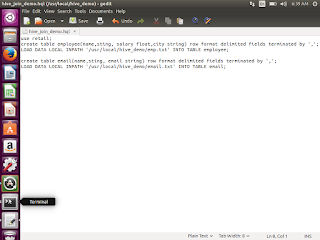

1.Create a udf_input.txt file -> sudo gedit udf_input.txt

Add the following rows, with the fields separated by tabs to match PigStorage('\t'):

Siva raju 1234 bangalore Hadoop

Sachin raju 345345 bangalore data

Sneha raju 9775 bangalore Hbase

Navya raju 6458 bangalore Hive

2.Create the pig_udf_script.pig script -> sudo gedit pig_udf_script.pig

3.Create a directory called pig-udf_input in HDFS and put the udf_input.txt file into it

hadoop fs -mkdir /user/pig_demo/pig-udf_input

hadoop fs -put udf_input.txt /user/pig_demo/pig-udf_input/

4.Open the pig_udf_script.pig file and add the following

REGISTER /home/usr/pig_demo/ConvertUpperCase.jar;

A = LOAD '/user/pig_demo/pig-udf_input/udf_input.txt' USING PigStorage('\t') AS (FName:chararray, LName:chararray, MobileNo:chararray, City:chararray, Profession:chararray);

B = FOREACH A GENERATE ConvertUpperCase($0), MobileNo, ConvertUpperCase(Profession), ConvertUpperCase(City);

STORE B INTO '/user/pig_demo/pig-udf_input/udf_output.txt';

DUMP B;

Run the UDF script using the following command.

pig pig_udf_script.pig
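As a rough check of what the script should print, the FOREACH step can be modelled in plain Python on the four sample rows. The function convert_upper_case below is a hypothetical stand-in that mirrors the Java UDF's logic (null for a missing or empty input tuple, otherwise the first argument in upper case); it is not code that Pig runs.

```python
def convert_upper_case(input_tuple):
    """Stand-in for the Java UDF: upper-case the first element of the input tuple."""
    if input_tuple is None or len(input_tuple) == 0:
        return None
    return str(input_tuple[0]).upper()

# Rows of udf_input.txt as (FName, LName, MobileNo, City, Profession).
rows = [
    ("Siva", "raju", "1234", "bangalore", "Hadoop"),
    ("Sachin", "raju", "345345", "bangalore", "data"),
    ("Sneha", "raju", "9775", "bangalore", "Hbase"),
    ("Navya", "raju", "6458", "bangalore", "Hive"),
]

# B: upper-cased first name, mobile number unchanged, upper-cased profession and city.
result = [
    (convert_upper_case((fname,)), mobile,
     convert_upper_case((profession,)), convert_upper_case((city,)))
    for fname, lname, mobile, city, profession in rows
]

for row in result:
    print(row)
```

The output has four fields per row because the script does not project LName, and the mobile number passes through untouched.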