This post explains how to install Flume, create a Twitter app for development, and stream tweets into HDFS.

1. Download the latest Flume binary tarball (this post uses apache-flume-1.6.0-bin.tar.gz).

2. Untar the downloaded file in whichever location you want.

sudo tar -xvzf apache-flume-1.6.0-bin.tar.gz
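Steps 1 and 2 can also be scripted. The sketch below is only an illustration: the Apache archive URL and the helper names (`flume_tarball`, `flume_url`, `install_flume`) are assumptions, not part of the original setup. The install directory matches the one used later in this post.

```shell
# Sketch only: the download URL and the function names are assumptions.
FLUME_VERSION="1.6.0"
INSTALL_DIR="/usr/local/flume-ng"

# Name of the binary tarball for a given Flume version.
flume_tarball() {
  printf 'apache-flume-%s-bin.tar.gz\n' "$1"
}

# Assumed download location on the Apache archive.
flume_url() {
  printf 'https://archive.apache.org/dist/flume/%s/%s\n' "$1" "$(flume_tarball "$1")"
}

# Download the tarball and extract it into the target directory.
install_flume() {
  version="$1"
  target="$2"
  wget "$(flume_url "$version")" || return 1
  sudo mkdir -p "$target" || return 1
  sudo tar -xvzf "$(flume_tarball "$version")" -C "$target"
}

# To run it: install_flume "$FLUME_VERSION" "$INSTALL_DIR"
```

Nothing is downloaded until `install_flume` is called, so the URL can be checked first with `flume_url "$FLUME_VERSION"`.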

3. Once that is done, run ssh localhost if you are not already connected to the SSH server.

4. Start HDFS: ./start-dfs.sh

5. Start YARN: ./start-yarn.sh

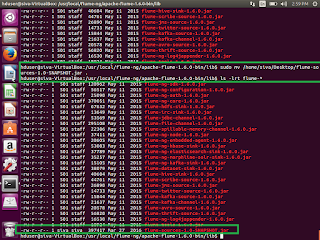

6. Go to the bin folder of the directory where Flume was extracted.

7. Here I have extracted Flume under /usr/local/flume-ng/apache-flume-1.6.0-bin

8. Download flume-sources-1.0-snapshot.jar and move it into /usr/local/flume-ng/apache-flume-1.6.0-bin/lib/

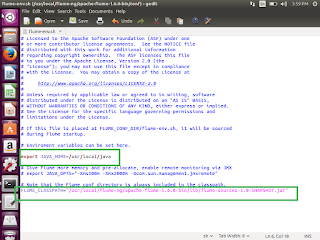

9. Next, set the Java path and the path to the snapshot jar in flume-env.sh.

/usr/local/flume-ng/apache-flume-1.6.0-bin/conf>sudo cp flume-env.sh.template flume-env.sh

/usr/local/flume-ng/apache-flume-1.6.0-bin/conf>sudo gedit flume-env.sh

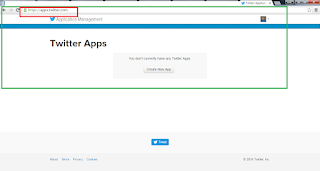
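For reference, the two settings from step 9 might look like the sketch below inside flume-env.sh. The `JAVA_HOME` value is an assumption and depends on where Java is installed on your machine. The jar path follows step 8.

```shell
# Sketch of the two lines to set in flume-env.sh.
# Assumption: this JAVA_HOME path is only an example; use your own JDK location.
export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64

# Put the Twitter source jar from step 8 on Flume's classpath.
FLUME_CLASSPATH="/usr/local/flume-ng/apache-flume-1.6.0-bin/lib/flume-sources-1.0-snapshot.jar"
```

Both variable names already appear, commented out, in flume-env.sh.template, so it is usually enough to uncomment and edit them.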

10. Now we need to register our application on the Twitter developer site.

11. Open Twitter. If you do not have an account, sign up first. Once you are signed in, go to the Twitter apps page.

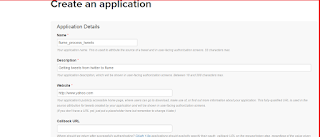

12. Click Create New App and enter the required details.

13. Check the I Agree checkbox

14. Once the application has been created, the Twitter page will look like this.

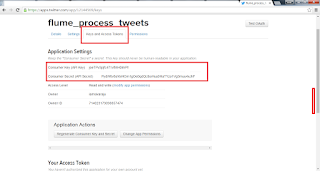

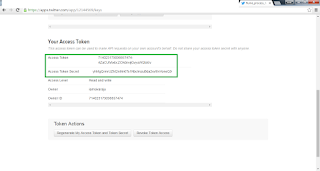

15. Click the Keys and Access Tokens tab, copy the consumer key and the consumer secret, and paste them into a text editor.

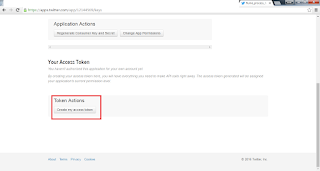

16. Click Create my access token.

17. This generates an access token and an access token secret. Copy these two values into the text editor as well.

18. Create a flume.conf file under /usr/local/flume-ng/apache-flume-1.6.0-bin/conf and paste the details below, replacing the four key and token values with the ones you copied in steps 15 to 17.

# Licensed to the Apache Software Foundation (ASF) under one
# or more contributor license agreements. See the NOTICE file
# distributed with this work for additional information
# regarding copyright ownership. The ASF licenses this file
# to you under the Apache License, Version 2.0 (the
# "License"); you may not use this file except in compliance
# with the License. You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing,
# software distributed under the License is distributed on an
# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY
# KIND, either express or implied. See the License for the
# specific language governing permissions and limitations
# under the License.
# The configuration file needs to define the sources,
# the channels and the sinks.
# Sources, channels and sinks are defined per agent,
# in this case called 'TwitterAgent'
TwitterAgent.sources = Twitter
TwitterAgent.channels = MemChannel
TwitterAgent.sinks = HDFS

TwitterAgent.sources.Twitter.type = com.cloudera.flume.source.TwitterSource
TwitterAgent.sources.Twitter.channels = MemChannel
TwitterAgent.sources.Twitter.consumerKey = joeTPv3pjfc471vfMH0lmP
TwitterAgent.sources.Twitter.consumerSecret = PydW6v8aYoiHOm1gOe0qdQUboHua9HaTYzo1Vg3muu4xJhF
TwitterAgent.sources.Twitter.accessToken = 714023179098857474-4ZaCUhAxbcZCKdnvijGvyuWQteEv
TwitterAgent.sources.Twitter.accessTokenSecret = yhMgQrmrUZht2nMn6Ts1NbclmzuBda2xvtIIvVoneQ
TwitterAgent.sources.Twitter.keywords = hadoop, big data, analytics, bigdata, cloudera, data science, data scientist, business intelligence, mapreduce, data warehouse, data warehousing, mahout, hbase, nosql, newsql, businessintelligence, cloudcomputing

TwitterAgent.sinks.HDFS.channel = MemChannel
TwitterAgent.sinks.HDFS.type = hdfs
TwitterAgent.sinks.HDFS.hdfs.path = hdfs://localhost:9000/user/flume/tweets/%Y/%m/%d/%H/
TwitterAgent.sinks.HDFS.hdfs.fileType = DataStream
TwitterAgent.sinks.HDFS.hdfs.writeFormat = Text
TwitterAgent.sinks.HDFS.hdfs.batchSize = 1000
TwitterAgent.sinks.HDFS.hdfs.rollSize = 0
TwitterAgent.sinks.HDFS.hdfs.rollCount = 10000

TwitterAgent.channels.MemChannel.type = memory
TwitterAgent.channels.MemChannel.capacity = 10000
TwitterAgent.channels.MemChannel.transactionCapacity = 100
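Before starting the agent, it can help to confirm that all four Twitter credentials are present in flume.conf. The function below is a minimal sketch of such a check. `check_flume_conf` is a hypothetical helper written for this post and is not part of Flume.

```shell
# Sketch only: check_flume_conf is a hypothetical helper, not a Flume command.
# It returns 0 when all four Twitter credential properties have a value.
check_flume_conf() {
  conf_file="$1"
  missing=0
  for key in consumerKey consumerSecret accessToken accessTokenSecret; do
    # A property counts as set when something non-blank follows the "=".
    if ! grep -Eq "^[[:space:]]*TwitterAgent\.sources\.Twitter\.${key}[[:space:]]*=[[:space:]]*[^[:space:]]" "$conf_file"; then
      echo "missing or empty: TwitterAgent.sources.Twitter.${key}" >&2
      missing=1
    fi
  done
  return "$missing"
}

# To run it:
# check_flume_conf /usr/local/flume-ng/apache-flume-1.6.0-bin/conf/flume.conf
```

The check only looks at whether a value exists. It cannot tell whether Twitter will accept the keys.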

19. Once that is done, run the Twitter agent in Flume.

/usr/local/flume-ng/apache-flume-1.6.0-bin>./bin/flume-ng agent -n TwitterAgent -c conf -f /usr/local/flume-ng/apache-flume-1.6.0-bin/conf/flume.conf
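While the agent runs, it is often easier to follow what it is doing if the log goes to the console. The sketch below builds the same command with Flume's `-Dflume.root.logger=INFO,console` option added and prints it for review. `agent_command` is a hypothetical helper name, and the `FLUME_HOME` value assumes the install location from step 7.

```shell
# Sketch only: agent_command is a hypothetical helper that prints the command.
# Assumption: Flume is installed at the location from step 7.
FLUME_HOME="/usr/local/flume-ng/apache-flume-1.6.0-bin"

agent_command() {
  printf '%s\n' "$FLUME_HOME/bin/flume-ng agent -n TwitterAgent -c $FLUME_HOME/conf -f $FLUME_HOME/conf/flume.conf -Dflume.root.logger=INFO,console"
}

# Print the command so it can be checked before running it.
agent_command
```

The agent name passed to `-n` has to match the prefix used in flume.conf, which is TwitterAgent here.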

20. Once it has started, wait for some time, press Ctrl+C to stop the agent, and then look at the tweets in HDFS.

21. Open a browser on the Unix machine, go to the URL below, and browse to /user/flume/tweets to see the tweets.

http://localhost:50075

22. If we can see data similar to what is shown on Twitter, then the unstructured data has been streamed from Twitter into HDFS successfully.
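The same check can be done from the command line instead of the browser. This is a minimal sketch, and the helper names `current_hour_dir` and `show_tweets` are made up for this post. Because the sink path in flume.conf ends in %Y/%m/%d/%H, tweets land in one directory per hour, so the current-hour directory may be empty if the agent ran earlier.

```shell
# Sketch only: helper names are hypothetical; the root path comes from flume.conf.
TWEETS_ROOT="/user/flume/tweets"

# Directory the sink would use for the current hour (year/month/day/hour).
current_hour_dir() {
  printf '%s/%s\n' "$TWEETS_ROOT" "$(date +%Y/%m/%d/%H)"
}

# List everything Flume has written, then print the first few tweets
# from the current hour's files.
show_tweets() {
  hdfs dfs -ls -R "$TWEETS_ROOT" || return 1
  hdfs dfs -cat "$(current_hour_dir)/*" | head -n 5
}

# To run it: show_tweets
```

If the listing shows files under an earlier hour, pass that directory to `hdfs dfs -cat` directly.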

This is how we can bring live tweet data into HDFS and then analyze it using Hive.

Thank you very much for viewing this post